The Subtle Magic of Softmax: Unveiling the Gibbs Distribution

Disclaimer: I am not a physicist.

It’s funny how often we use softmax without realizing we’re invoking a physical principle from statistical mechanics. But here’s the kicker: when we apply softmax to our logits $z_i$, we’re computing a Gibbs distribution whose energies are the negated logits, $E_i = -z_i$, at temperature $T = 1$ (in units where $k_B = 1$). Now, why should you care? Let me break it down:

-

Maximum Entropy Principle: The Gibbs distribution isn’t arbitrary - it’s what you get when you maximize entropy subject to energy constraints.

-

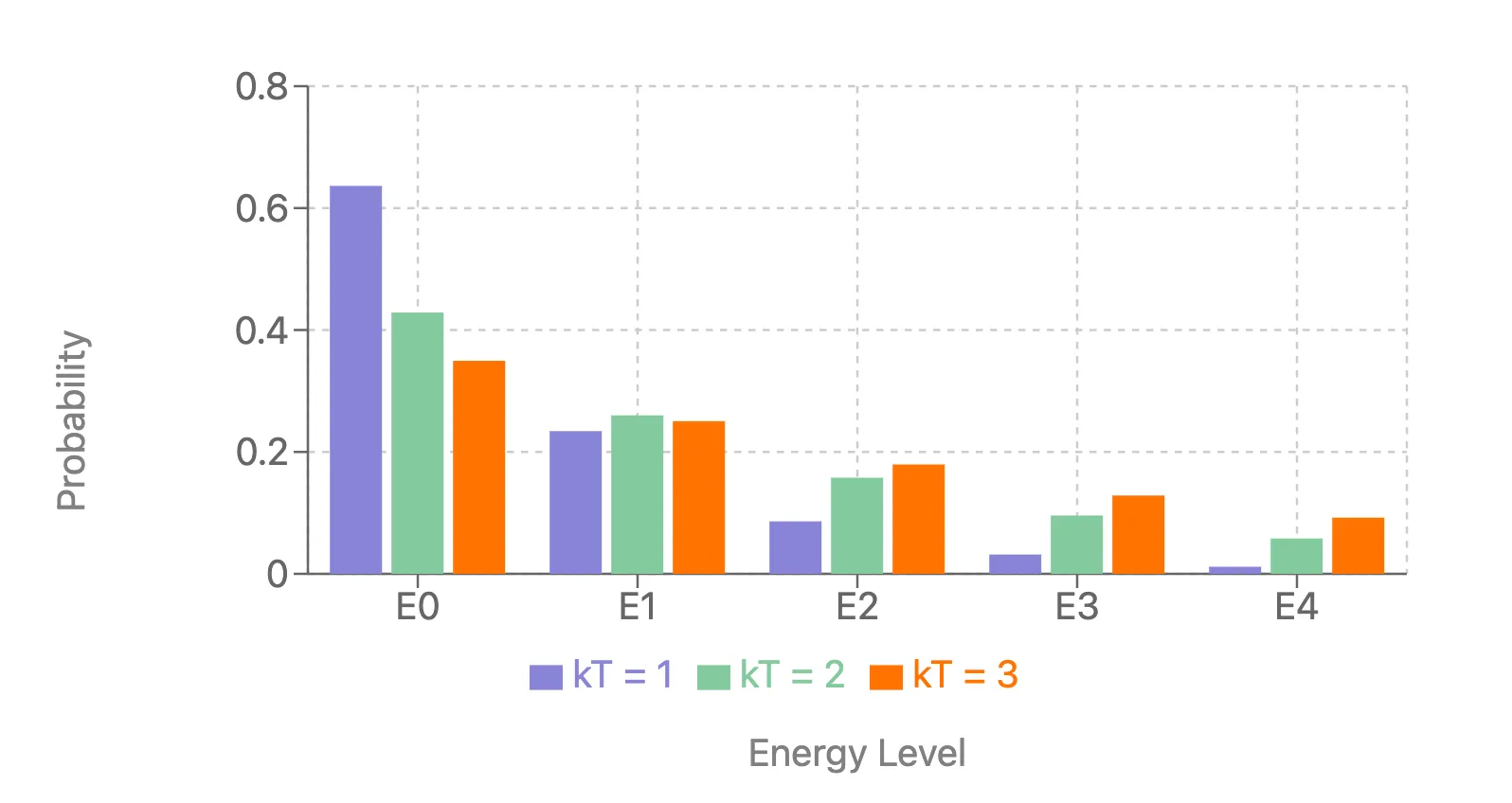

Temperature Control: Remember that temperature parameter in the Gibbs formula? It’s usually set to 1 and forgotten. But by exposing it, we get a knob to tune the “decisiveness” of our model. Low T? Sharp decisions. High T? More exploration. It’s like having a built-in simulated annealing mechanism.

- Bridge to Physics: Recognizing the Gibbs distribution opens a two-way street between machine learning and statistical physics. We can borrow ideas from physics (like mean field theory) and potentially contribute back.
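To make the correspondence concrete, here is a minimal pure-Python sketch. It assumes the identification $E_i = -z_i$ (energy is the negated logit) and sets $k_B = 1$, so $T$ is in the same units as the logits; `softmax` and `gibbs` are illustrative helper names, not a library API. Both functions should return the same probabilities at every temperature.

```python
import math

def softmax(logits, T=1.0):
    """Softmax with temperature T: exp(z_i / T) / sum_j exp(z_j / T)."""
    m = max(logits)  # subtract the max logit so math.exp cannot overflow
    exps = [math.exp((z - m) / T) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def gibbs(energies, kT=1.0):
    """Gibbs distribution: exp(-E_i / kT) / Z, where Z is the partition function."""
    weights = [math.exp(-E / kT) for E in energies]
    Z = sum(weights)
    return [w / Z for w in weights]

logits = [2.0, 1.0, 0.1]
energies = [-z for z in logits]  # assumption: energy = negated logit

for T in (0.1, 1.0, 10.0):
    print(T, [round(p, 4) for p in softmax(logits, T)],
          [round(p, 4) for p in gibbs(energies, kT=T)])
```

Going from T = 0.1 to T = 10, the output moves from nearly one-hot to nearly uniform. Subtracting the max logit does not change the result, because it cancels in the ratio; it only keeps the exponentials in a safe range for large logits.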

Let me convince you that the Gibbs distribution is actually the one with maximum entropy.

System States

Consider a system that can exist in a discrete set of states labeled by $i = 1, 2, \dots, N$. Each state $i$ has an energy $E_i$.

Probabilities

Let $p_i$ be the probability of the system being in state $i$.

Then, the entropy of the system is given by:

$$S = -k_B \sum_i p_i \ln p_i$$

where $k_B$ is Boltzmann’s constant.
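A minimal sketch of the entropy formula, assuming $k_B = 1$ (so entropy is measured in nats) and the usual convention $0 \ln 0 = 0$. The three example distributions are illustrative: spreading probability evenly raises the entropy, while concentrating it lowers it, down to zero for a certain outcome.

```python
import math

def entropy(p, k_B=1.0):
    """S = -k_B * sum_i p_i ln p_i, using the convention 0 * ln 0 = 0."""
    return k_B * sum(-pi * math.log(pi) for pi in p if pi > 0)

uniform = [0.25, 0.25, 0.25, 0.25]
peaked = [0.97, 0.01, 0.01, 0.01]
certain = [1.0, 0.0, 0.0, 0.0]

for name, p in [("uniform", uniform), ("peaked", peaked), ("certain", certain)]:
    print(name, round(entropy(p), 4))
```

With only the normalization constraint, the uniform distribution over $N$ states attains the maximum, $\ln N$; the energy constraint is what pulls the answer away from uniform.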

Constraints

The sum of all probabilities must equal 1:

$$\sum_i p_i = 1$$

The average energy of the system is fixed at some value $\langle E \rangle$:

$$\sum_i p_i E_i = \langle E \rangle$$
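These two constraints alone don’t pin down a unique distribution, which is why we need a rule for choosing one. In this minimal sketch ($k_B = 1$, illustrative energies 0, 1, 2 and a target average energy of 0.8, all assumptions), three different distributions satisfy both constraints yet have different entropies. The maximum-entropy principle picks the one with the highest entropy among all feasible distributions, which need not be any of these three.

```python
import math

def entropy(p):
    """S = -sum_i p_i ln p_i with k_B = 1; states with p_i = 0 contribute 0."""
    return sum(-pi * math.log(pi) for pi in p if pi > 0)

def satisfies_constraints(p, energies, mean_energy, tol=1e-12):
    """Check normalization and the fixed-average-energy constraint."""
    normalized = abs(sum(p) - 1.0) < tol
    avg = sum(pi * Ei for pi, Ei in zip(p, energies))
    return normalized and abs(avg - mean_energy) < tol

energies = [0.0, 1.0, 2.0]
mean_energy = 0.8

candidates = {
    "spread out": [0.4, 0.4, 0.2],
    "skip the middle": [0.6, 0.0, 0.4],
    "no top level": [0.2, 0.8, 0.0],
}
for name, p in candidates.items():
    print(name, satisfies_constraints(p, energies, mean_energy), round(entropy(p), 4))
```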

Let’s maximize the entropy with respect to the probabilities $p_i$ while satisfying the two constraints.

We are going to use Lagrange multipliers $\alpha$ and $\beta$ to incorporate the constraints into the optimization.

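Lagrangian Function

$$\mathcal{L} = -k_B \sum_i p_i \ln p_i - \alpha \left( \sum_i p_i - 1 \right) - \beta \left( \sum_i p_i E_i - \langle E \rangle \right)$$

We need to find the probabilities $p_i$ that maximize $\mathcal{L}$. So we simply take the partial derivative of $\mathcal{L}$ with respect to each $p_i$ and set it to zero:

$$\frac{\partial \mathcal{L}}{\partial p_i} = -k_B \left( \ln p_i + 1 \right) - \alpha - \beta E_i = 0$$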
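To double-check the partial derivative, here is a minimal pure-Python sketch that compares the analytic gradient $-k_B(\ln p_i + 1) - \alpha - \beta E_i$ with central finite differences of the Lagrangian. It writes `U` for $\langle E \rangle$, defaults to $k_B = 1$, and uses hypothetical values for the point `p`, the energies, and the multipliers; only the derivative formula is being tested, not an optimum.

```python
import math

def lagrangian(p, energies, U, alpha, beta, k_B=1.0):
    """L = S - alpha*(sum p - 1) - beta*(sum p*E - U), with S = -k_B * sum p ln p."""
    S = -k_B * sum(pi * math.log(pi) for pi in p)
    normalization = sum(p) - 1.0
    energy = sum(pi * Ei for pi, Ei in zip(p, energies)) - U
    return S - alpha * normalization - beta * energy

def analytic_grad(p, energies, alpha, beta, k_B=1.0):
    """dL/dp_i = -k_B * (ln p_i + 1) - alpha - beta * E_i."""
    return [-k_B * (math.log(pi) + 1.0) - alpha - beta * Ei
            for pi, Ei in zip(p, energies)]

def numeric_grad(p, energies, U, alpha, beta, k_B=1.0, h=1e-6):
    """Central finite differences, treating each p_i as an independent variable."""
    grad = []
    for i in range(len(p)):
        up = list(p)
        up[i] += h
        down = list(p)
        down[i] -= h
        diff = (lagrangian(up, energies, U, alpha, beta, k_B)
                - lagrangian(down, energies, U, alpha, beta, k_B))
        grad.append(diff / (2.0 * h))
    return grad

# Hypothetical interior point and multipliers; the point need not be optimal.
p = [0.5, 0.3, 0.2]
energies = [0.0, 1.0, 2.0]
print(analytic_grad(p, energies, alpha=0.7, beta=1.3))
print(numeric_grad(p, energies, U=0.8, alpha=0.7, beta=1.3))
```

The two printed gradients should agree to many decimal places. Note that $\langle E \rangle$ drops out of the derivative, since it is a constant.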

Rewriting the equation,

$$k_B \ln p_i = -k_B - \alpha - \beta E_i$$

Divide both sides by $k_B$,

$$\ln p_i = -1 - \frac{\alpha}{k_B} - \frac{\beta E_i}{k_B}$$

Exponentiate both sides to solve for $p_i$,

$$p_i = e^{-1 - \alpha / k_B} \, e^{-\beta E_i / k_B}$$

Define a constant $C$,

$$C = e^{-1 - \alpha / k_B}$$

So,

$$p_i = C \, e^{-\beta E_i / k_B}$$

Use the normalization constraint to solve for $C$:

$$\sum_i p_i = C \sum_i e^{-\beta E_i / k_B} = 1$$

Therefore,

$$C = \frac{1}{Z}$$

where $Z$ is the partition function:

$$Z = \sum_i e^{-\beta E_i / k_B}$$

Substitute $C$ back into the expression for $p_i$:

$$p_i = \frac{e^{-\beta E_i / k_B}}{Z}$$

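This is the Gibbs (Boltzmann) distribution. Because $S$ is strictly concave in the $p_i$ and both constraints are linear, this stationary point is the unique global maximum, not merely a critical point.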
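We can also check the maximum numerically. This minimal sketch assumes $k_B = 1$, illustrative energies 0, 1, 2 and a target average energy `U` of 0.8. It finds $\beta$ by bisection (the average energy decreases as $\beta$ grows), then nudges the Gibbs distribution along $d = (1, -2, 1)$, a direction chosen because it preserves both constraints: $\sum_i d_i = 0$ and $\sum_i d_i E_i = 0$. Every nudge should lower the entropy.

```python
import math

def gibbs(energies, beta, k_B=1.0):
    """p_i = exp(-beta * E_i / k_B) / Z, with Z the partition function."""
    weights = [math.exp(-beta * E / k_B) for E in energies]
    Z = sum(weights)
    return [w / Z for w in weights]

def entropy(p, k_B=1.0):
    """S = -k_B * sum_i p_i ln p_i (0 ln 0 = 0)."""
    return k_B * sum(-pi * math.log(pi) for pi in p if pi > 0)

def mean_energy(p, energies):
    return sum(pi * Ei for pi, Ei in zip(p, energies))

def solve_beta(energies, U, lo=-50.0, hi=50.0, iters=200):
    """Bisection for beta with <E> = U; <E> is decreasing in beta."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mean_energy(gibbs(energies, mid), energies) > U:
            lo = mid  # average energy still too high: beta must be larger
        else:
            hi = mid
    return 0.5 * (lo + hi)

energies = [0.0, 1.0, 2.0]
U = 0.8
beta = solve_beta(energies, U)
p_star = gibbs(energies, beta)
S_star = entropy(p_star)

d = [1.0, -2.0, 1.0]  # keeps sum(p) and sum(p * E) unchanged
for eps in (-0.05, -0.01, 0.01, 0.05):
    q = [pi + eps * di for pi, di in zip(p_star, d)]
    print(eps, round(entropy(q), 6), "<", round(S_star, 6))
```

For these three levels the condition can also be solved in closed form, $e^{-\beta} = (\sqrt{97} - 1)/12$, giving $\beta \approx 0.305$, which is a handy cross-check for the bisection.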

Relationship Between $\beta$ and Temperature

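In thermodynamics, the Lagrange multiplier associated with the energy constraint is related to the inverse temperature. At the optimum, $\beta$ equals the rate at which the maximized entropy changes with the average energy, $\partial S / \partial \langle E \rangle$, while thermodynamics defines temperature through $\partial S / \partial E = 1/T$. Specifically:

$$\beta = \frac{1}{T}$$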

So,

$$\frac{\beta}{k_B} = \frac{1}{k_B T}$$

Substitute back into the expressions for $p_i$ and $Z$:

$$p_i = \frac{e^{-E_i / k_B T}}{Z}$$

And

$$Z = \sum_i e^{-E_i / k_B T}$$

By maximizing the entropy with respect to the probabilities under the constraints of normalization and fixed average energy, we derive the Gibbs distribution:

$$p_i = \frac{e^{-E_i / k_B T}}{\sum_j e^{-E_j / k_B T}}$$
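As a sanity check of $\beta = 1/T$, here is a minimal sketch: along the family of Gibbs distributions, the entropy should change with the average energy at rate $dS/d\langle E \rangle = 1/T$, the thermodynamic definition of temperature. The energies are arbitrary illustrative values, and $k_B$ is left as a free parameter. The check should hold for any value of $k_B$, because $k_B$ appears both in $S$ and in the exponent.

```python
import math

def gibbs(energies, T, k_B=1.0):
    """p_i = exp(-E_i / (k_B * T)) / Z."""
    weights = [math.exp(-E / (k_B * T)) for E in energies]
    Z = sum(weights)
    return [w / Z for w in weights]

def entropy(p, k_B=1.0):
    """S = -k_B * sum_i p_i ln p_i (0 ln 0 = 0)."""
    return k_B * sum(-pi * math.log(pi) for pi in p if pi > 0)

def mean_energy(p, energies):
    return sum(pi * Ei for pi, Ei in zip(p, energies))

def dS_dU(energies, T, k_B=1.0, h=1e-5):
    """Central-difference estimate of dS/d<E> along the Gibbs family, varying T."""
    p_hi = gibbs(energies, T + h, k_B)
    p_lo = gibbs(energies, T - h, k_B)
    dS = entropy(p_hi, k_B) - entropy(p_lo, k_B)
    dU = mean_energy(p_hi, energies) - mean_energy(p_lo, energies)
    return dS / dU

energies = [0.0, 1.0, 2.0, 5.0]
for T in (0.5, 1.0, 3.0):
    print(T, dS_dU(energies, T), 1.0 / T)
```

Setting $k_B = 1$ and $E_i = -z_i$ in `gibbs` turns it into softmax of the logits divided by $T$, so the temperature knob in softmax is exactly this $T$.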